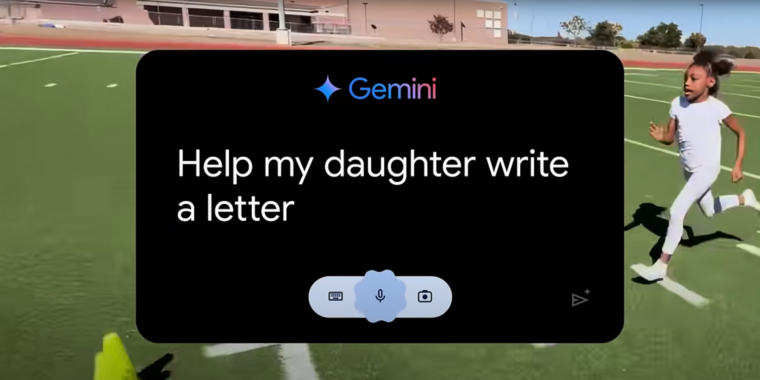

If you’ve watched any Olympics coverage this week, you’ve likely been confronted with an ad for Google’s Gemini AI called “Dear Sydney.” In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone.

“I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or tackle difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

Inserting Gemini into a child’s heartfelt request for parental help makes it seem like the parent in question is offloading their responsibilities to a computer in the coldest, most sterile way possible. More than that, it comes across as passing up an opportunity to bond with a child over a shared interest in a creative way.

This is the problem I had with the LLM announcements when they first came out. One of their favorite examples was writing a thank-you note.

The whole point of a thank-you note is that you didn’t have to write it, but you took time out of your day anyway to find your own words to thank someone.

This is one of the weirdest of several weird things about the people who are marketing AI right now.

I just went to ChatGPT, and one of its suggested prompts is “Message to comfort a friend.”

If I were in some sort of distress and someone sent me a comforting message, and I later found out they had ChatGPT write it for them, I think I would abandon the friendship as a pointless endeavor.

What world do these people live in where they’re like, “I wish AI would write meaningful messages to my friends for me, so I didn’t have to”?